Summary: This article shows a step-by-step approach for setting up an automated build and deploy framework in IBM® WebSphere® Message Broker (also known as IBM® Integration Bus) using Ant, Hudson and Subversion. It also includes the set of framework components needed to set up automated build and deploy for a sample WMB project.

Broker Archive (BAR) file:

A Broker Archive (BAR) file is a deployable container in a compressed file format. It contains a single deployment descriptor (broker.xml), compiled message flows (*.cmf), message set dictionary files (*.xsdzip, *.dictionary), style sheets (*.xsl), XSLT files and JAR files. When you unzip the BAR file, the descriptor file can be found under the META-INF folder. The deployment descriptor holds the configurable properties of the flows and their nodes.

Sample contents of a deployment descriptor file (broker.xml):

<Broker>

<CompiledMessageFlow name="com.ibm.wmb.build.SampleMsgFlow1">

...

<ConfigurableProperty uri="com.ibm.wmb.build.SampleMsgFlow1#additionalInstances"/>

<ConfigurableProperty override="DB2_DSN_DEV" uri="com.ibm.wmb.build.SampleMsgFlow1#Compute.dataSource"/>

<ConfigurableProperty override="SAMPLE.DEV.IN" uri="com.ibm.wmb.build.SampleMsgFlow1#MQ_Input.queueName"/>

...

</CompiledMessageFlow >

</Broker>
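Because a BAR file is a compressed archive, the descriptor can be inspected without the toolkit. The following is a minimal sketch, assuming the descriptor sits at META-INF/broker.xml and uses the un-namespaced layout shown above; the function name is hypothetical and not part of any Broker tooling.

```python
import io
import zipfile
import xml.etree.ElementTree as ET


def list_configurable_properties(bar_bytes):
    """Return (uri, override) pairs from META-INF/broker.xml in a BAR archive."""
    # Assumption: the BAR content is passed in as raw bytes of the archive.
    with zipfile.ZipFile(io.BytesIO(bar_bytes)) as bar:
        descriptor = bar.read("META-INF/broker.xml")
    root = ET.fromstring(descriptor)
    # 'override' is None when no override has been set for the property.
    return [(p.get("uri"), p.get("override"))
            for p in root.iter("ConfigurableProperty")]
```

Called with the bytes of a BAR file, it returns one pair per ConfigurableProperty element, which is handy for checking which properties still lack an override before deployment.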

Step 1: Get Source Code from SCM:

Whenever a build is scheduled or triggered in Hudson, or a change is detected in SCM, the latest (or desired) version of the project code is copied from the SCM server to a broker toolkit workspace on the Hudson server. The Broker source code can be imported to the Hudson server in two ways.

1. [Typical] The Hudson job retrieves the source code from Subversion (or any other SCM) to the Hudson server according to the SCM configuration in the job. All the resources are then copied to the broker toolkit workspace using a shell script or an Ant task. This method is preferred if the SCM configuration is static and there are few build resources.

2. [Custom] An Ant script retrieves the source code from Subversion (or any other SCM) directly into the broker toolkit workspace. This method is preferred if the SCM configuration is dynamic and the build needs only selected projects out of a large number of other projects (not required for the build) under the same parent. Ant supports various other SCM systems, including PVCS, CVS, VSS and IBM® ClearCase.

The sample Ant target below checks out the code from Subversion directly into the broker toolkit workspace (the second method).

Note: The Ant svn task requires the corresponding library JAR files to be loaded prior to execution.

Sample Ant target for importing Broker projects from Subversion:

${broker.build.projects} = SampleMsgFlowProject,SampleMsgSetProject

${source.location} = trunk or tag/... or branch/...

Ant Target:import.broker.projects

<target name="import.broker.projects" description="This ant target copies broker projects files from Subversion to broker toolkit workspace.">

<for list="${broker.build.projects}" param="var-broker-prj">

<sequential>

<svn javahl="false" svnkit="true" username="${svn.user}" password="${svn.password}">

<checkout url="${svn.url}/@{var-broker-prj}/${source.location}" destPath="${toolkit.workspace}/@{var-broker-prj}" force="true" depth="infinity" ignoreexternals="true"/>

</svn>

</sequential>

</for>

</target>
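To make the loop in the target above concrete, here is a small sketch of how the comma-separated project list expands into one checkout per project. It only mirrors the URL and destination pattern used in the target; the function name and the example repository URL are hypothetical.

```python
def checkout_plan(svn_url, projects, source_location, workspace):
    """Map each project in a comma-separated list to (checkout URL, destination path)."""
    plan = []
    for project in (p.strip() for p in projects.split(",")):
        if not project:
            continue  # ignore empty entries such as a trailing comma
        # Same pattern as the target: ${svn.url}/<project>/${source.location}
        url = f"{svn_url.rstrip('/')}/{project}/{source_location}"
        dest = f"{workspace.rstrip('/')}/{project}"
        plan.append((url, dest))
    return plan


# Hypothetical repository URL and workspace path, for illustration only.
for url, dest in checkout_plan("http://svn.example.com/repo",
                               "SampleMsgFlowProject,SampleMsgSetProject",
                               "trunk", "/opt/toolkit/workspace"):
    print(url, "->", dest)
```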

Step 2: Create BAR file(s):

The Message Broker toolkit installation provides a set of workbench command line tools that help in generating deployable BAR files and overriding the configuration inside a BAR file.

An Ant task can be configured to execute the Broker MQSI workbench command mqsicreatebar, which creates the Broker Archive (BAR) file from the Broker project resources (message flows, message sets and their references) in the local broker toolkit workspace. Because this workbench command runs Eclipse in "headless" mode, it often takes noticeably longer than the same build started from the Broker toolkit.

If multiple BAR files have to be created, the Ant script can execute the mqsicreatebar command below in sequence (in a loop) to avoid lock or workspace issues. Refer to the Download files for more details.

mqsicreatebar -data workspace -b barFileName [-cleanBuild] [-version versionId] [-esql21] [-p projectName1 [projectName2 […]]] -o filePath1 [filePath2 […]] [-skipWSErrorCheck] [-trace] [-v traceFilepath]

Parameters:

-data workspace (Required)

– The path of the Broker toolkit workspace in which your projects are created.

-b barFileName (Required)

– The name of the BAR (compressed file format) archive file where the result is stored. The BAR file is replaced if it already exists and the META-INF/broker.xml file is created.

-cleanBuild (Optional)

– Refreshes the projects in the workspace and then invokes a clean build before new items are added to the BAR file.

-version versionId (Optional)

– Appends the _ (underscore) character and the value of versionId to the names of the compiled versions of the message flows (.cmf) files added to the BAR file, before the file extension.

-esql21 (Optional)

– Compile ESQL for brokers at Version 2.1 of the product.

-p projectName1 [projectName2 […]] (Optional)

– Projects containing files to include in the BAR file in a new workspace. A new workspace is a system folder without the .metadata folder. The projects defined must already exist in the folder defined in the -data parameter, and must include all projects and their reference projects that a deployable resource, defined in the -o parameter, needs. The -p parameter is optional with an existing workspace, but you should use -p, together with a new workspace, in a build environment. If a project that you specify is part of your workspace but is currently closed, the command opens and builds the project so that the files in the project can be included in the BAR file.

-o filePath1 [filePath2 […]] (Required)

– The workspace relative path (including the project) of a deployable file to add to the BAR file; for example, a .msgflow or messageSet.mset file. You can add more than one deployable file to this command by using the following format: -o filePath1 filePath2 … filePath'n'

Note: In Message Broker version 8, the MQSI runtime command mqsipackagebar can also be used to create BAR files.

-skipWSErrorCheck (Optional) [From 7.0.0.3 only]

– Forces the BAR file compilation process to run, even if errors exist in the workspace.

-trace (Optional)

– Displays trace information for BAR file compilation. The -trace parameter writes trace information into the system output stream, in the language specified by the system locale.

-v traceFilePath (Optional)

– File name of the output log to which trace information is sent.

Sample command and Ant target for creating a BAR file:

mqsicreatebar -cleanBuild -data ../workspace -b ../Sample.bar -p SampleProject -o SampleProject/com/ibm/poc/build/wmb/SampleMessageFlow.msgflow

${numberOfExecGrps} = 2

${exec_grp1.msg.flows} = SampleMsgFlowProject/com/ibm/wmb/build/SampleMsgFlow1.msgflow, SampleMsgFlowProject/com/ibm/wmb/build/SampleMsgFlow2.msgflow

${exec_grp1.msg.sets} = SampleMsgSetProject/SampleMsgSet/messageSet.mset

${exec_grp2.msg.flows} = SampleMsgFlowProject/com/ibm/wmb/build/SampleMsgFlow3.msgflow

${exec_grp2.msg.sets} = SampleMsgSetProject/SampleMsgSet/messageSet.mset

${version.number} = 1.1.6.2

Ant Target: mqsicreatebar.buildbar

<target name="mqsicreatebar.buildbar" description="This ant target creates the BAR files using mqsicreatebar with referencing all required projects.">

<propertyregex property="argln_broker_prj" input="${broker.build.projects}" regexp="\," replace=" " global="true" defaultValue="${broker.build.projects}"/>

<for list="${numberOfExecGrps}" param="var-exec-grp-num" delimiter="${line.separator}">

<sequential>

<propertyregex property="argln_eg@{var-exec-grp-num}_msgflow" input="${exec_grp@{var-exec-grp-num}.msg.flows}" regexp="\," replace=" " global="true" defaultvalue="${exec_grp@{var-exec-grp-num}.msg.flows}"/>

<propertyregex property="argln_eg@{var-exec-grp-num}_msgset" input="${exec_grp@{var-exec-grp-num}.msg.sets}" regexp="\," replace=" " global="true" defaultValue="${exec_grp@{var-exec-grp-num}.msg.sets}"/>

<exec executable="${toolkit.home}/mqsicreatebar" spawn="false" failonerror="true" vmlauncher="false">

<arg value="-cleanBuild"/>

<arg value="-data"/>

<arg value="${toolkit.workspace}"/>

<arg value="-b" />

<arg value="${exec_grp@{var-exec-grp-num}.bar.file.path}" />

<arg value="-version"/>

<arg value="${version.number}"/>

<arg value="-p" />

<arg line="${argln_broker_prj}"/>

<arg value="-o"/>

<arg line="${argln_eg@{var-exec-grp-num}_msgflow}"/>

<arg line="${argln_eg@{var-exec-grp-num}_msgset}"/>

</exec>

</sequential>

</for>

</target>
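The way the target turns comma-separated properties into a single mqsicreatebar invocation can be sketched as follows. This is an illustration under assumptions, not the framework's implementation: the function name is hypothetical, and only flags documented in the parameter list above are used.

```python
def mqsicreatebar_args(workspace, bar_file, projects, flows, msg_sets, version=None):
    """Build the mqsicreatebar argument list from comma-separated property values."""
    def split(csv):
        # Plays the role of the propertyregex steps: commas become separate arguments.
        return [item.strip() for item in csv.split(",") if item.strip()]

    args = ["mqsicreatebar", "-cleanBuild", "-data", workspace, "-b", bar_file]
    if version:
        args += ["-version", version]
    args += ["-p"] + split(projects)
    # Message flows and message sets are both passed as deployable files after -o.
    args += ["-o"] + split(flows) + split(msg_sets)
    return args


print(" ".join(mqsicreatebar_args(
    "../workspace", "../EG2.bar",
    "SampleMsgFlowProject,SampleMsgSetProject",
    "SampleMsgFlowProject/com/ibm/wmb/build/SampleMsgFlow3.msgflow",
    "SampleMsgSetProject/SampleMsgSet/messageSet.mset",
    version="1.1.6.2")))
```

Returning a list rather than a single string keeps each path as its own argument, which matters if a workspace path ever contains spaces.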

Step 3: Apply BAR Overrides:

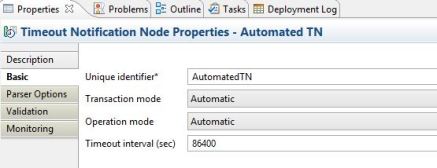

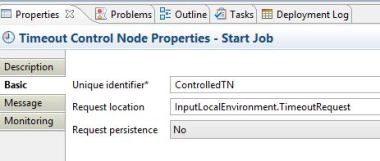

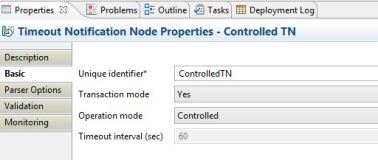

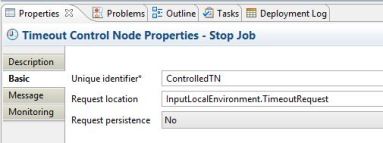

All configurable nodes in a message flow have properties that need to be changed to suit the broker environment before deployment. Examples are MQ/JMS node properties, Database node properties, Timeout node properties, HTTP/Web Service node properties and any promoted message flow properties that change from environment to environment.

An Ant task can be configured to execute the Broker MQSI command mqsiapplybaroverride, which applies the environment-specific configuration to the BAR file either from a separate property file or from overrides given manually on the command itself.

mqsiapplybaroverride -b barFile [-p overridesFile] [-m manualOverrides] [-o outputFile] [-v traceFile]

Parameters:

-b barFile (Required)

– The path to the BAR file (in compressed format) to which the override values apply. The path can be absolute or relative to the executable command.

-p overridesFile (Optional)

– The path to one of the following resources:

- A BAR file that contains the deployment descriptor that is used to apply overrides to the BAR file.

- A properties file in which each line contains a property-name=override or current-property-value=new-property-value pair.

- A deployment descriptor that is used to apply overrides to the BAR file.

-m manualOverrides (Optional)

– A list of property-name=override pairs, current-property-value=override pairs, or a combination of them, to be applied to the BAR file. The pairs in the list are separated by commas (,). On Windows, you must enclose the list in quotation marks (" "). If used with the overridesFile (-p) parameter, overrides specified by the manualOverrides (-m) parameter are performed after any overrides specified by the -p parameter have been made.

-o outputFile (Optional)

– The name of the output BAR file to which the BAR file changes are to be made. If an output file is not specified, the input file is overwritten.

-v traceFile (Optional)

– Specifies that the internal trace is to be sent to the named file.

Note: Each override that is specified in a -p overrides file or a -m overrides list must conform to one of the following syntaxes:

1. FlowName#NodeName.PropertyName=NewPropertyValue (or FlowName#PropertyName=NewPropertyValue for message flow properties). This syntax sets the override of the named property to NewPropertyValue.

2. OldPropertyValue=NewPropertyValue. This syntax does a global search and replace on the property value OldPropertyValue. It overrides the value fields of OldPropertyValue in the deployment descriptor with NewPropertyValue.

3. FlowName#NodeName.PropertyName (or FlowName#PropertyName for message flow properties). This syntax removes any override applied to the property of the supplied name.
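The three syntaxes can be told apart mechanically. The sketch below is a simplified illustration, assuming that an entry without "=" is a removal and that a "#" in the left-hand side marks a property name rather than an old value; the real command may disambiguate differently, and the function name is hypothetical.

```python
def classify_override(line):
    """Classify one override entry as ('set' | 'replace' | 'remove', key, value)."""
    key, sep, value = line.strip().partition("=")
    if not sep:
        # Syntax 3: no '=' means the override on the named property is removed.
        return ("remove", key, None)
    if "#" in key:
        # Syntax 1: FlowName#NodeName.PropertyName=NewPropertyValue
        return ("set", key, value)
    # Syntax 2: OldPropertyValue=NewPropertyValue (global search and replace)
    return ("replace", key, value)


for entry in ("com.ibm.wmb.build.SampleMsgFlow1#additionalInstances=7",
              "DB2_DSN_DEV=DB2_DSN",
              "com.ibm.wmb.build.SampleMsgFlow1#Compute.dataSource"):
    print(classify_override(entry))
```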

Sample Ant target for applying overrides:

mqsiapplybaroverride -b ../SampleProject.bar -p ../Sample_BarOverride_EG1.property

Sample contents of a BAR Override property file (Sample_BarOverride_EG1.property):

com.ibm.wmb.build.SampleMsgFlow1#additionalInstances=7

com.ibm.wmb.build.SampleMsgFlow1#Compute.dataSource=DB2_DSN

com.ibm.wmb.build.SampleMsgFlow1#MQ_Output.queueName=SAMPLE.PROD.OUT

Ant Target: mqsiapplybaroverride.modifybar

<target name="mqsiapplybaroverride.modifybar" description="This ant target applies overrides to the generated bar file from properties file.">

<for list="${numberOfExecGrps}" param="var-exec-grp-num" delimiter="${line.separator}">

<sequential>

<exec executable="${toolkit.home}/mqsiapplybaroverride" spawn="false" failonerror="true">

<arg value="-b" />

<arg value="${exec_grp@{var-exec-grp-num}.bar.file.path}" />

<arg value="-p" />

<arg value="${exec_grp@{var-exec-grp-num}.property.file}" />

</exec>

</sequential>

</for>

</target>

Before applying overrides: (broker.xml inside BAR file)

<ConfigurableProperty uri="com.ibm.wmb.build.SampleMsgFlow1#additionalInstances"/>

<ConfigurableProperty override="DB2_DSN_DEV" uri="com.ibm.wmb.build.SampleMsgFlow1#Compute.dataSource"/>

<ConfigurableProperty override="SAMPLE.DEV.OUT" uri="com.ibm.wmb.build.SampleMsgFlow1#MQ_Output.queueName"/>

While applying the overrides:

BIP1137I: Applying overrides using toolkit mqsiapplybaroverride...

BIP1140I: Overriding property com.ibm.wmb.build.SampleMsgFlow1#additionalInstances with '7'...

BIP1140I: Overriding property com.ibm.wmb.build.SampleMsgFlow1#Compute.dataSource with 'DB2_DSN'...

BIP1140I: Overriding property com.ibm.wmb.build.SampleMsgFlow1#MQ_Output.queueName with 'SAMPLE.PROD.OUT'...

BIP1143I: Saving Bar file ../TEAM1/generated_bars/PROD/PRJ1_TEAM1_2013-08-27_02-41-21_19_EG1.bar...

BIP8071I: Successful command completion.

After applying overrides: (broker.xml inside BAR file)

<ConfigurableProperty override="7" uri="com.ibm.wmb.build.SampleMsgFlow1#additionalInstances"/>

<ConfigurableProperty override="DB2_DSN" uri="com.ibm.wmb.build.SampleMsgFlow1#Compute.dataSource"/>

<ConfigurableProperty override="SAMPLE.PROD.OUT" uri="com.ibm.wmb.build.SampleMsgFlow1#MQ_Output.queueName"/>
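The before/after change to broker.xml can be reproduced in a few lines, which is useful for previewing an override file without touching a real BAR. This is only a model of the visible effect on the descriptor, not how mqsiapplybaroverride is implemented; it handles just the property-name=value form, and the function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def apply_overrides(descriptor_xml, overrides):
    """Return descriptor XML with 'override' attributes set from a {uri: value} dict."""
    root = ET.fromstring(descriptor_xml)
    for prop in root.iter("ConfigurableProperty"):
        uri = prop.get("uri")
        if uri in overrides:
            # Set or replace the override for this property; others stay untouched.
            prop.set("override", overrides[uri])
    return ET.tostring(root, encoding="unicode")


before = ('<Broker><CompiledMessageFlow name="com.ibm.wmb.build.SampleMsgFlow1">'
          '<ConfigurableProperty uri="com.ibm.wmb.build.SampleMsgFlow1#additionalInstances"/>'
          '</CompiledMessageFlow></Broker>')
print(apply_overrides(before, {"com.ibm.wmb.build.SampleMsgFlow1#additionalInstances": "7"}))
```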

Step 4: Transfer the BAR file(s) to the remote Broker server:

After the BAR files have been created and their configuration overridden, they need to be moved to the Broker server for deployment to the broker.

An Ant task can be configured to copy the BAR files over SSH once the two steps above have succeeded. The task transfers the generated BAR file(s) from the Hudson server to the remote broker server using SSH public key authentication. Because the task fails the build on a transfer error (failonerror="true"), the failure is reported back to Hudson and the subsequent steps are not executed.

Sample Ant target to transfer the BAR files to the Broker server:

Ant Target: sftp.bar.to.broker.server

<target name="sftp.bar.to.broker.server" description="This ant target ftps the BAR file to remote Broker server.">

<for list="${numberOfExecGrps}" param="var-exec-grp-num" delimiter="${line.separator}">

<sequential>

<scp file="${exec_grp@{var-exec-grp-num}.bar.file.path}" todir="${USER}@${HOST}:${REMOTE_PATH}" keyfile="${user.home}/.ssh/id_rsa" failonerror="true"/>

</sequential>

</for>

</target>

Step 5: Deploy BAR file(s):

The Broker runtime installation provides a set of command line tools for deploying BAR files to a broker and for managing (creating or deleting) brokers, execution groups and so on. The Broker runtime command mqsideploy makes deployment requests of all types from a batch command script, without the need for manual interaction. The default operation is a delta (incremental) deployment. Specify -m to override the default operation and perform a complete deployment.

An Ant task can be configured to execute the MQSI runtime command mqsideploy, which retrieves the BAR files from the directory and deploys them in sequence on the local broker.

mqsideploy brokerSpec -e execGrpName ((-a barFileName [-m]) | -d resourcesToDelete) [-w timeoutSecs] [-v traceFileName]

Parameters:

brokerSpec (Required)

– You must specify at least one parameter to identify the target broker for this command, in one of the following forms:

- brokerName : Name of a locally defined broker. You cannot use this option if the broker is on a remote computer.

- -n brokerFileName : File containing remote broker connection parameters (*.broker).

- -i ipAddress -p port -q queueMgr : Hostname, port and queue manager of a remote broker.

-e execGrpName (Optional)

– Name of the execution group to which to deploy.

-a barFileName (Optional)

– Name of the broker archive (BAR) file that is to be used for deployment of the message flow and other resources.

-m (Optional)

– Empties the execution group before deployment (full deployment).

-d resourcesToDelete (Optional)

– Deletes a colon-separated list of resources from the execution group.

-w timeoutSecs (Optional)

– Maximum number of seconds to wait for the broker to respond (default is 60).

-v traceFileName (Optional)

– Sends verbose internal trace to the specified file.

Sample Ant target for deploying BAR file:

mqsideploy BRK7000 -e POC -a ../Sample.bar -m -w 600

Ant Target: mqsideploy.deploybar

<target name="mqsideploy.deploybar" description="This ant target deploys the BAR file to broker server remotely.">

<for list="${numberOfExecGrps}" param="var-exec-grp-num" delimiter="${line.separator}">

<sequential>

<sshexec host="${HOST}" username="${USER}" keyfile="${user.home}/.ssh/id_rsa" command=". ~/.bash_profile; ${MQSI_HOME}/mqsideploy ${BROKER} -e ${EXEC_GRP@{var-exec-grp-num}} -a ${REMOTE_PATH}/${exec_grp@{var-exec-grp-num}.bar.file.name} ${deploy.mode} -w 600" failonerror="true"/>

</sequential>

</for>

</target>
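The command string passed to sshexec follows the mqsideploy syntax given earlier, where -a (with optional -m) and -d are alternatives. A minimal sketch of assembling and validating such a command for a locally defined broker is shown below; the function and parameter names are hypothetical and not part of the framework.

```python
def mqsideploy_args(broker, exec_group, bar_file=None, delete=None,
                    full=False, timeout_secs=None):
    """Build an mqsideploy argument list for a locally defined broker."""
    if (bar_file is None) == (delete is None):
        raise ValueError("specify exactly one of bar_file (-a) or delete (-d)")
    if full and bar_file is None:
        raise ValueError("-m only applies when deploying a BAR file with -a")
    args = ["mqsideploy", broker, "-e", exec_group]
    if bar_file is not None:
        args += ["-a", bar_file]
        if full:
            args.append("-m")  # empty the execution group first (full deployment)
    else:
        # -d takes a colon-separated list of resources to delete
        args += ["-d", ":".join(delete)]
    if timeout_secs is not None:
        args += ["-w", str(timeout_secs)]
    return args


print(" ".join(mqsideploy_args("BRK7000", "POC", bar_file="../Sample.bar",
                               full=True, timeout_secs=600)))
```

With the values from the sample command above, the sketch reproduces the same argument order: broker name, -e, -a, -m, then -w.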

Step 6: Subversion Tagging/Branching:

A snapshot of the build (in this case, a tag or a branch) can be taken in two ways.

1. [Typical] Hudson by default provides a configuration to take a snapshot of the resources once the build is complete. This option is preferred when the SCM location URL is explicitly configured in the Hudson job and the resources are static for all builds.

2. [Custom] An Ant target can be written to take a snapshot of the resources once the build is complete. This option is preferred when the SCM location URL is not explicitly configured in the Hudson job and the resources are not static for all builds.

Sample Ant target for creating Subversion Tagging/Branching:

The sample Ant target below tags the last successful/stable build in Subversion. For each broker project involved in the build, it creates a tag at the tags level of that project, named with the current build id and carrying a comment, so that a build for a higher environment can be configured to take its source from the tagged successful/stable build.

Ant Target: subversion.tagging.branching

<target name="subversion.tagging.branching" description="This target connects to svn and creates the snapshot(tag/branch) from the build code to the respective project.">

<for list="${broker.build.projects}" param="var-broker-prj">

<sequential>

<svn javahl="false" svnkit="true" username="${svn.username}" password="${svn.password}">

<copy srcUrl="${svn.url}/@{var-broker-prj}/${source.location}" destUrl="${svn.url}/@{var-broker-prj}/${snapshot.location}/last-successful/${env.SNAPSHOT_NAME}" message="Tagging Project @{var-broker-prj} with tag name ${env.SNAPSHOT_NAME} from ${source.location}."/>

</svn>

</sequential>

</for>

</target>

Auto Build Deploy Framework Install and Set up:

1. Hudson Set up and Configuration: Download the Hudson WAR file and install it on the platform (Windows or Linux). Create a Hudson job with a configuration similar to the sample config file in Downloads.

2. WMB Toolkit Installation and Folder structure: Install the WMB toolkit on the Hudson server along with the fix pack (FP) and create a toolkit workspace.

3. Master Ant Build Project structure: Import the Broker build master project from Downloads into the Broker toolkit workspace and modify variables such as SVN, broker server, user id and password.

4. WMB Project setup: Create a folder called "build" in the main message flow project for which you want to trigger the build, and add the property files and the Ant build file for this project.

Hudson Parameters for triggering the Broker Build/Deploy: